The way we interact with real-world visual data is changing. Until now, reviewing footage or identifying real-time conditions required specialized tools, manual tagging, and endless data sorting. That ends today. Just as Google made the internet searchable, we’re doing the same for the real world.

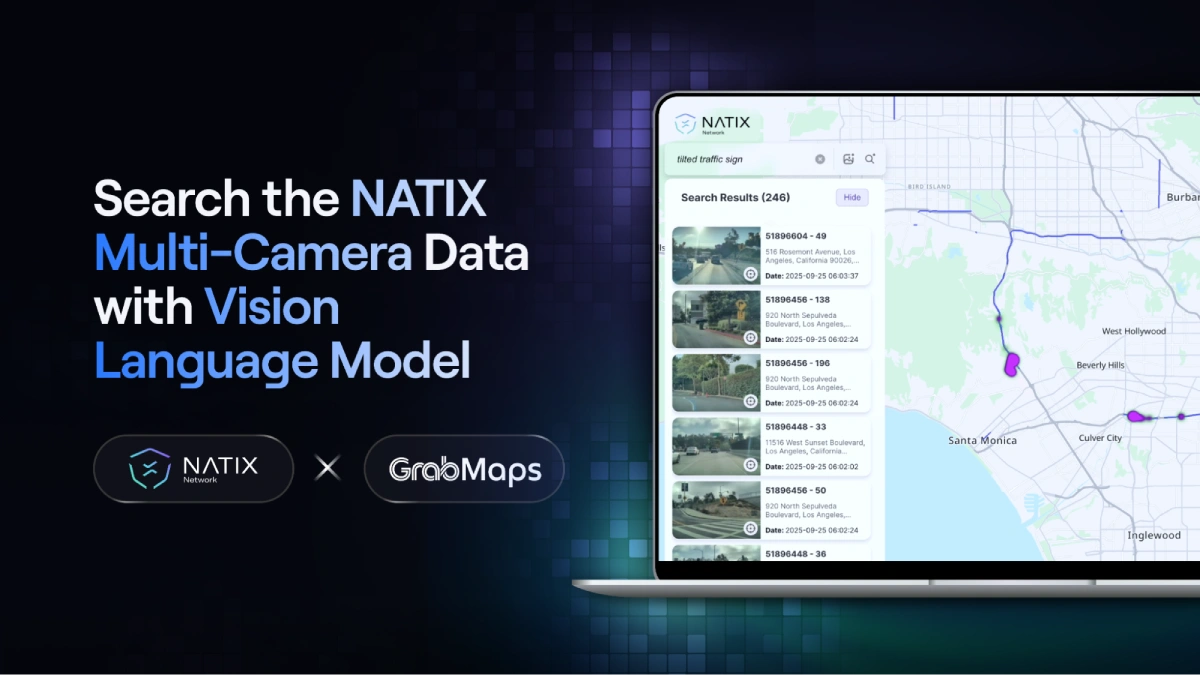

We are proud to announce the launch of the NATIX WorldSeek, a breakthrough platform, powered by GrabMaps, that makes the real-world street imagery searchable through an advanced AI.

Built using GrabMaps’ AI technology and starting with NATIX VX360 data (with additional imagery sources to be added next, including partner SLI and other multi-sensor datasets), the VLM enables users to explore and analyze real-world environments by simply typing in what they are looking for.

The launch of the NATIX WorldSeek expands the partnership between NATIX and GrabMaps, marking a new era in real-world intelligence. For the first time, anyone can search and analyze the physical world as effortlessly as typing a query online, unlocking a visual understanding of our cities, roads, and environments with pinpoint accuracy. Users can search for any keyword (e.g., “potholes” or “roadwork”) and the model will instantly return corresponding street-level imagery, location data, and additional information. The AI-based agent also handles complex queries with precision (e.g., “skateboarder next to a Tesla”), and the only limit is your imagination. If the input exists in the database, the AI will surface it.

This capability changes everything for teams that need to understand real-world environments.

Transportation departments can instantly find every “heavy traffic” zone in a city, automotive teams can search for region-specific street signs, and cities can pull up every clip showing “potholes in the middle of the street.”

Work that once required manually reviewing thousands of hours of video can now happen in seconds.

A Visual Language Model (VLM) is a new class of generative AI system that understands both language and imagery, linking text-based queries to real-world visuals. In simple terms, it’s an AI that can see what you mean. When a user searches for “skateboard and Tesla,” or “bus and pedestrian,” the model doesn’t rely on manual labels; it visually identifies those features within NATIX’s real-world 360° footage.

A VLM is dynamic and context-aware. It visually interprets materials, behaviors, and relationships within a scene. For Physical AI — robots and autonomous vehicles that perceive and act in the real world — this ability is fundamental. Physical AI systems need to interpret visual context as fluidly as language: recognizing objects, anticipating motion, and reasoning about spatial relationships. A VLM provides exactly that connective tissue, translating the language we use to describe the world into the visual evidence those systems must understand.

Through a simple interface, users can type any natural-language query, and the system immediately returns relevant visual results from NATIX’s VX360 database, complete with timestamps and coordinates. At GrabMaps, the VLM technology was first used to analyze and search street-level imagery across Southeast Asia. By integrating NATIX data into that framework, we’re scaling this intelligence globally — transforming millions of kilometers of real-world imagery into a searchable feature.

By design, the system ingests vast amounts of geo-tagged imagery, from dashcams, drones, and street-level cameras, and uses vision-language models to semantically index this data, bridging the gap between how we speak about the world and how machines see it. The result is an AI-accessible interface for the physical world, searchable by object, scene, signage, landmarks, and beyond. From infrastructure management to identifying edge cases for Physical AI development, WorldSeek redefines how AI observes, organizes, and ultimately understands the world.

This launch is the first step. In the future, we will use the same technology to automatically discover and retrieve edge cases across our video database (e.g., “a pedestrian emerging from behind a bus,” “vehicle skidding during a lane change,” “slanted stop sign at dusk”) to accelerate autonomous driving training and validation.

WorldSeek is a step toward a broader transformation in how physical environments are digitized and understood. Organizations that rely on real-world data have long faced the limitations of manual review and fragmented tooling. A searchable interface for global imagery changes the speed at which insights can be extracted and decisions can be made.

As NATIX grows its data engine and brings additional partners into the ecosystem, WorldSeek will evolve into a powerful layer of real-world intelligence. It will help improve maps, support safer autonomous systems, and give developers a new way to work with large-scale visual data. The future of Physical AI depends on access to high-quality, interpretable real-world information. WorldSeek creates that access.